Implementing Snowflake Using Azure Data Factory

BimlFlex has established an intuitive process to implement Snowflake using Azure Data Factory (ADF) for cloud based data warehousing solutions, as demonstrated in the video below.

BimlFlex - Implement Snowflake Using Azure Data Factory

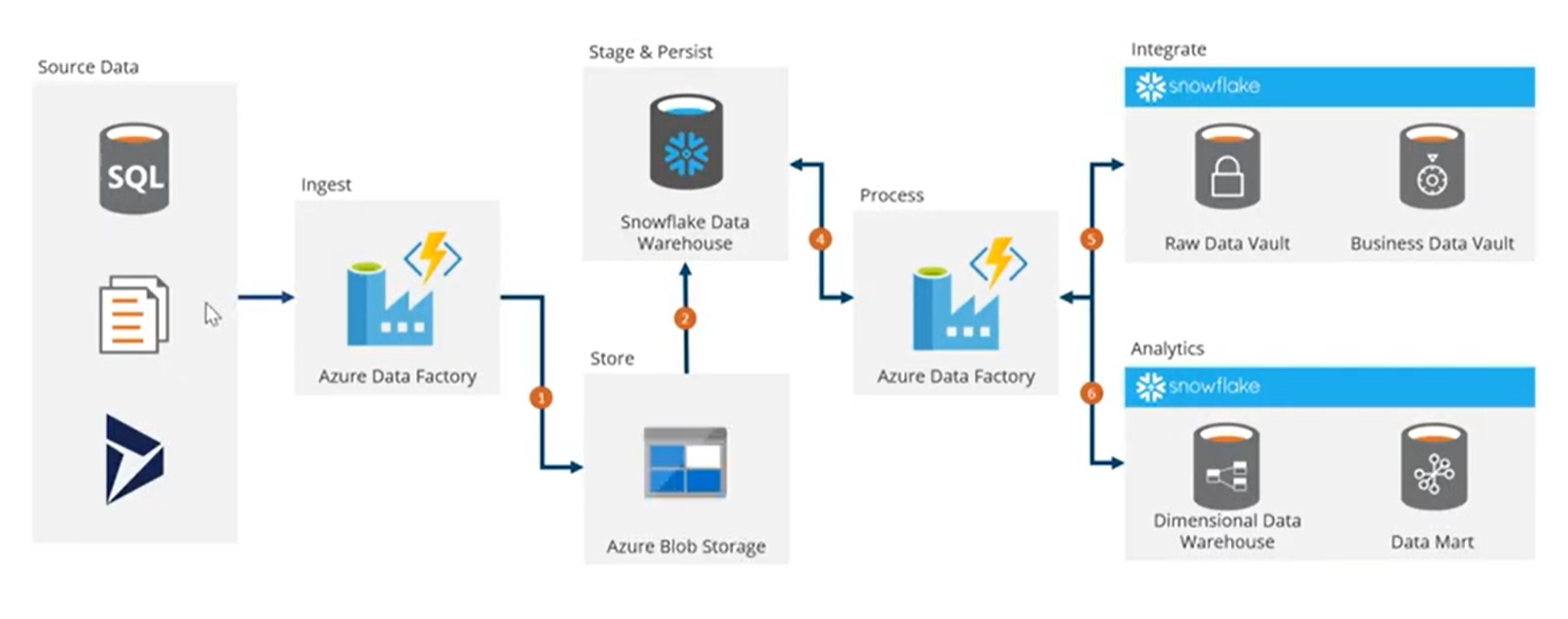

Architecture

Regardless of source data, this example will utilize ADF copy commands to ingest and land (stage) the source data in Azure Blob Storage, as a parquet file.

BimlFlex will provide logic to map the resulting files so that the generated Snowflake Stored Procedure code can load the data into the Snowflake tables.

With the source data being held in a Snowflake database, using Azure Data Factory, the data can then effectively be processed into Data Marts or Data Vaults.

Lakehouse Medallion Architecture Support

BimlFlex supports the medallion architecture pattern for Snowflake Lakehouse implementations:

| Layer | BimlFlex Implementation | Snowflake Components |

|---|---|---|

| Bronze | Staging + Persistent Staging | Stages for landing, tables for raw data |

| Silver | Data Vault or Normal Form | Integrated tables with Time Travel support |

| Gold | Data Mart / Dimensional | Optimized tables with automatic clustering |

Bronze Layer

Raw data lands via Snowflake stages (internal or external), then loads to tables. BimlFlex manages:

- Staging tables for current batch processing

- Persistent Staging Area for historical retention

Silver Layer

BimlFlex supports two approaches:

- Data Vault (recommended): Hub, Link, and Satellite patterns with full history

- Normal Form: Traditional relational modeling

Gold Layer

Dimensional models optimized for analytics:

- Star schema patterns with Fact and Dimension tables

- Automatic clustering for query performance

- Materialized views for complex aggregations

For detailed guidance on implementing medallion architecture, see the Delivering Lakehouse documentation.

Implement Snowflake using Azure Data Factory

The example is intended to follow the guide for Creating a Landing Area.

The following video walks through the common steps and considerations for deploying an Azure Data Factory for Snowflake.

Additional BimlFlex documentation regarding the implementation of Snowflake in Azure Data Factory can be referenced here.

How to Configure Snowflake in BimlFlex

Detailed prerequisites on working with Snowflake and BimlFlex are provided in the Snowflake configuration overview section. The below information assumes this configuration has been completed.

Snowflake Samples

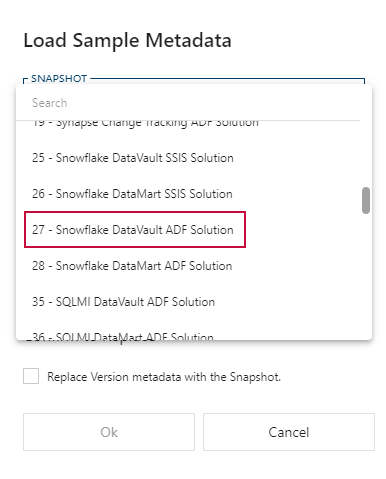

BimlFlex provides a number of metadata samples, including samples that have a specific focus on Snowflake within Azure Data Factory in this example.

Loading the sample metadata from within BimlFlex is as simple as selecting the snapshot from the Load Sample Metadata dropdown menu on the BimlFlex Dashboard.

More information on lakehouse and data modeling implementations:

As with all examples the data here is pointed at AdventureWorksLT 2012. The imported sample metadata will have modeled point in time (PIT) tables and bridge tables, and on top of those, built Data Mart tables, and Dimension- and Fact tables.

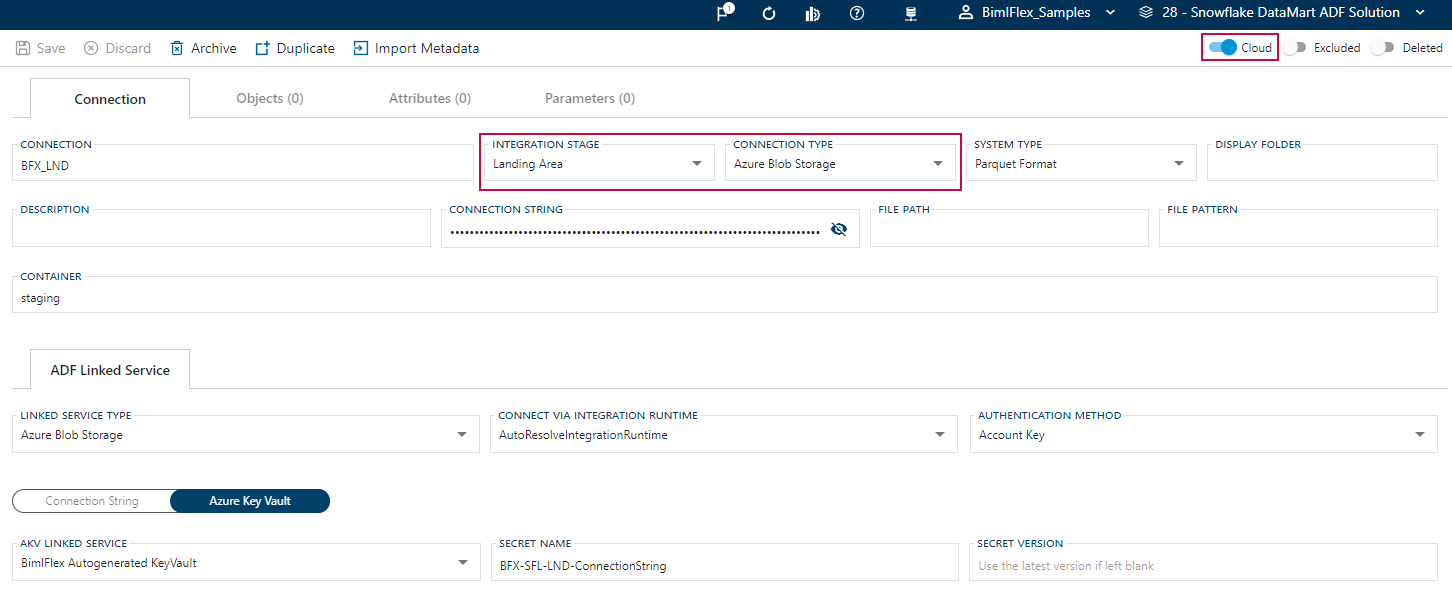

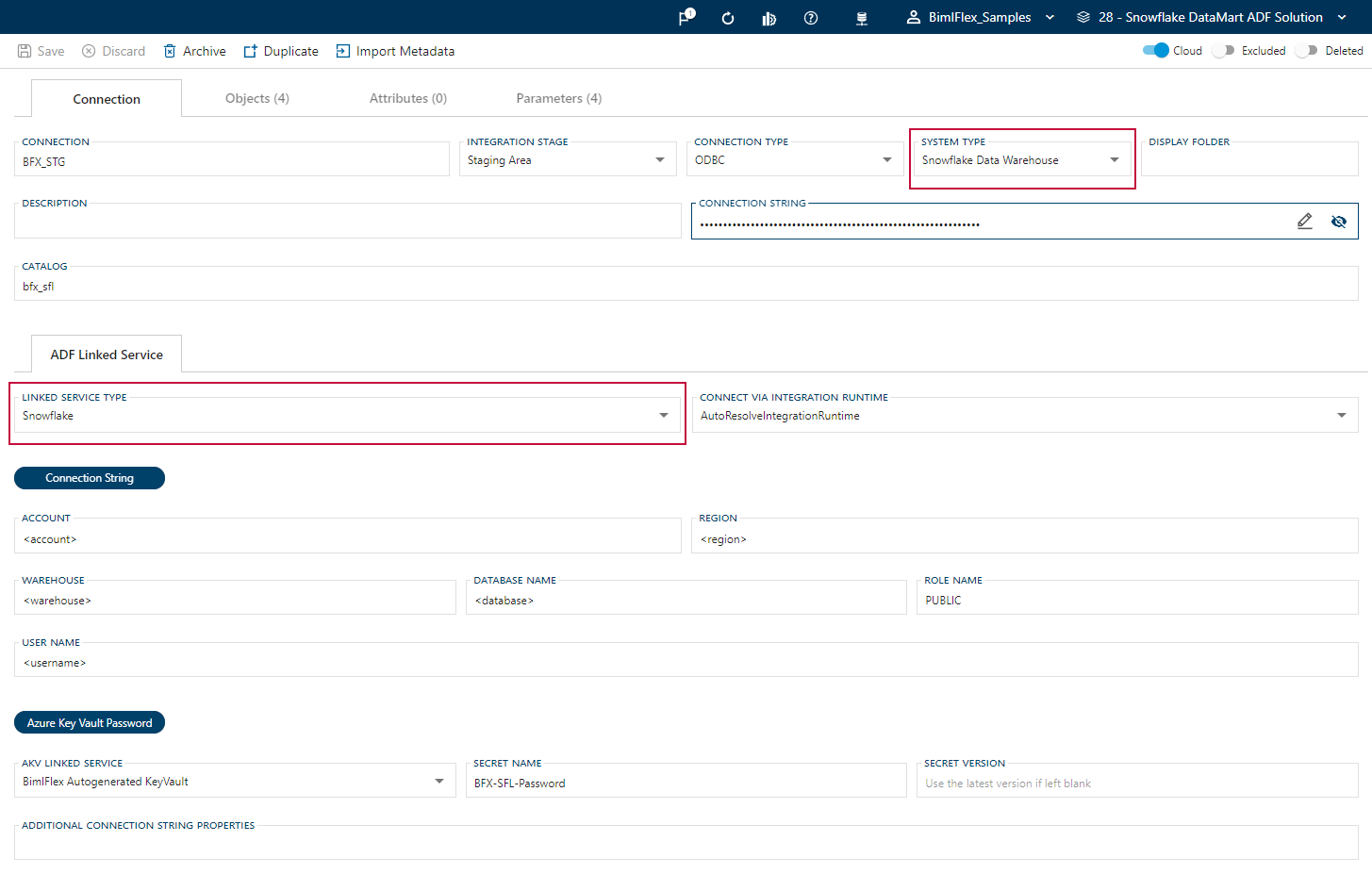

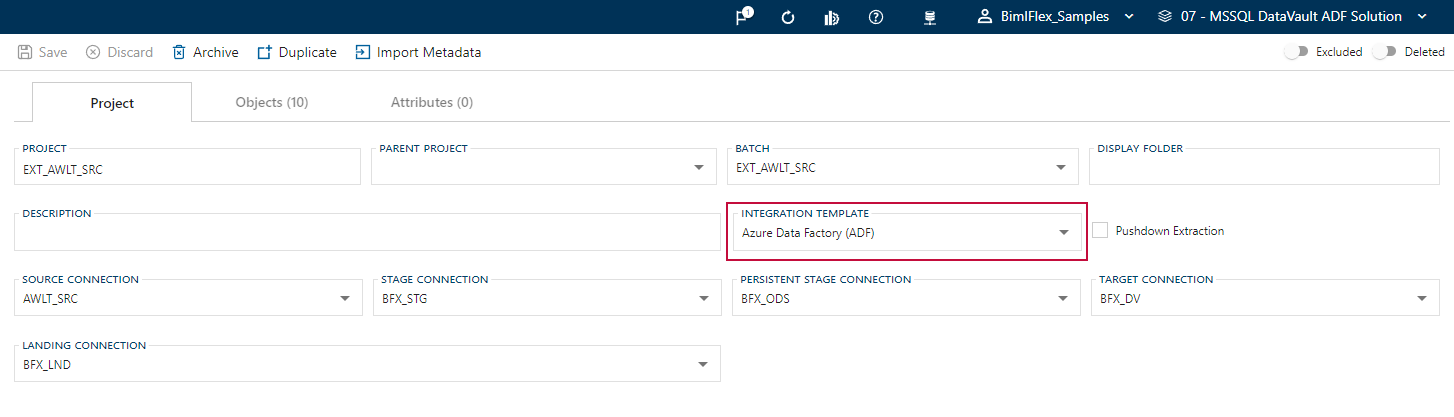

BimlFlex Standard Connections

The connection settings remain standard as per previously uploaded BimlFlex featured videos, and should be configured as follows from within the BimlFlex Connections menu:

- Source System is cloud enabled

- Staging / Landing Environment is configured for Blob Storage configured with ADF linked services

- System Type is configured for Snowflake Data Warehouse

- Linked Service Type is configured for Snowflake

- Integration Template is configured for ADF Source -> Target

The simplicity of selecting settings, paired with the intelligence of BimlFlex to execute the appropriate functions, greatly streamlines the process of converging any solution into Snowflake.

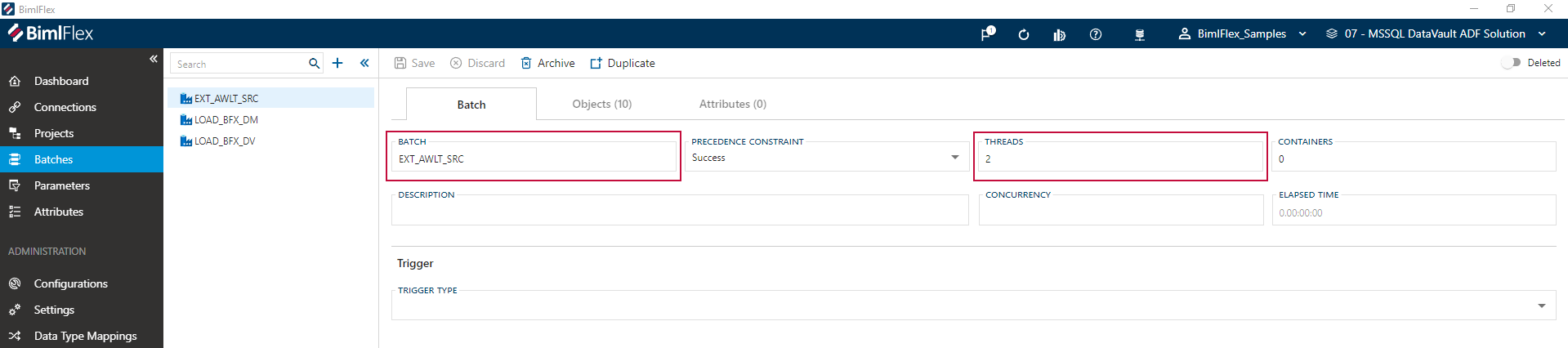

Prior to building out a solution BimlFlex also allows the opportunity to configure batches, assign batches to different compute warehouses, or adjust scaling up or down, from within the BimlFlex Batches menu.

Output

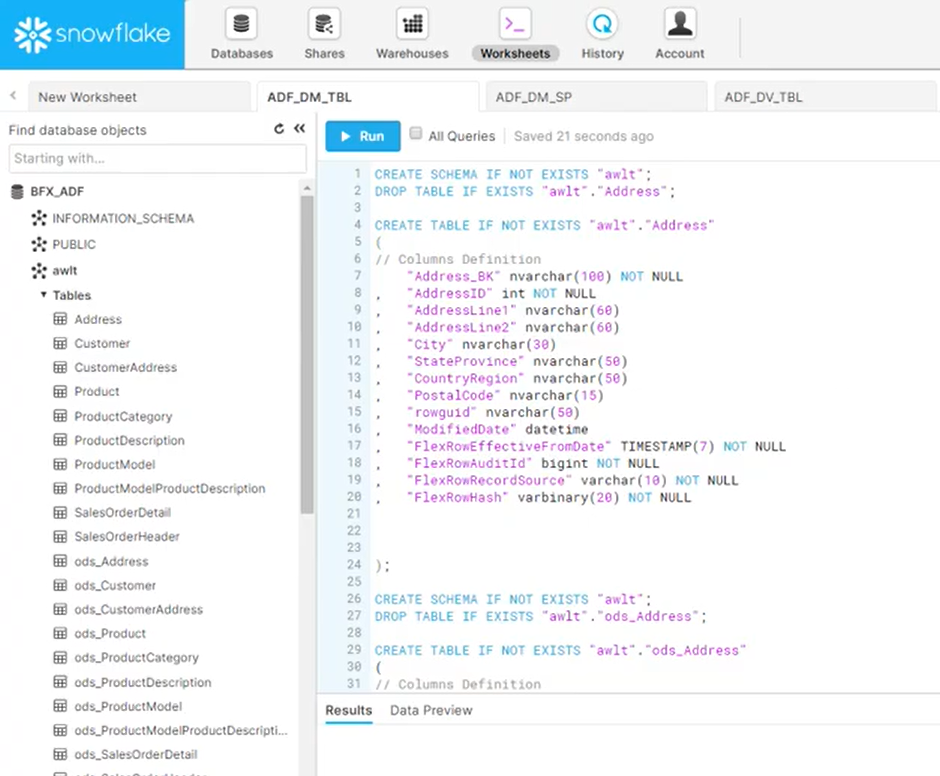

BimlFlex will provide all necessary Snowflake code, such as table, queries, and procedures definitions.

Depending on the selected Integration Template, BimlFlex will also generate supporting orchestration artifacts in either SSIS or ADF. But, because the data pipelines themselves are running fully on Snowflake using Stored Procedures, it is possible to use third party orchestration approaches or tools to direct the overall process.

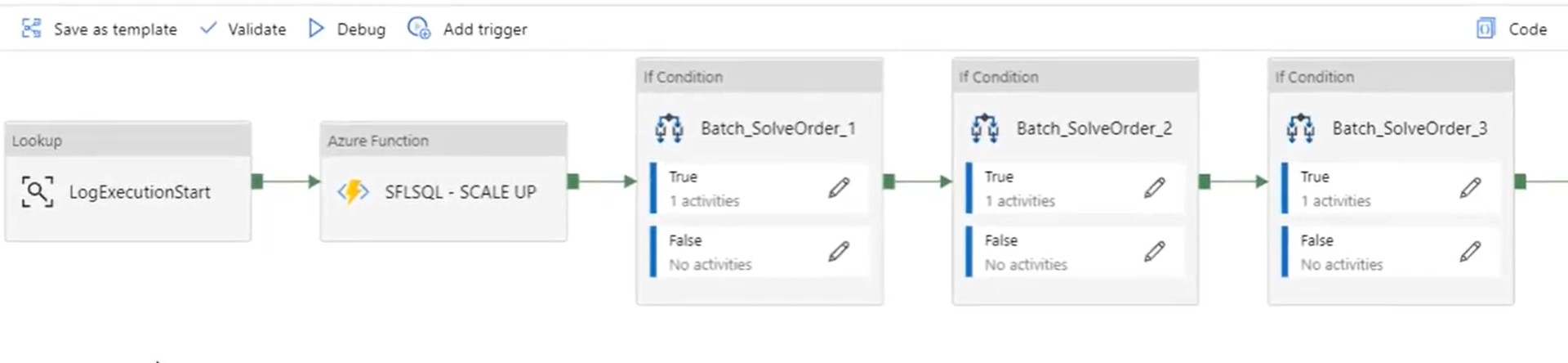

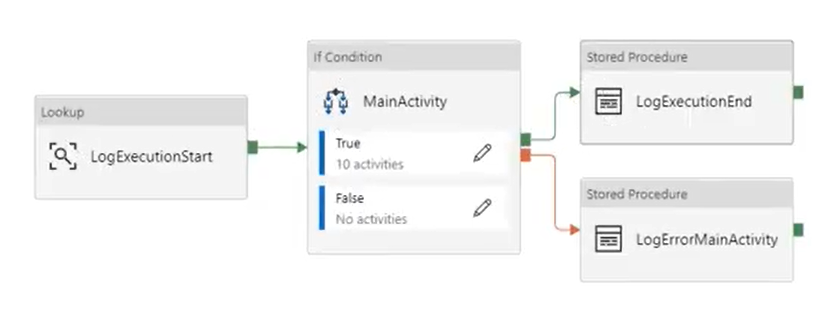

The output has been configured for Snowflake, deployed to Azure Data Factory, and visualized as such:

Having now generated a solution, which may or may not consist of multiple compute warehouses targeting different batches, the following options may be adjusted:

- Scale up or scale down

- View copy commands including completions or errors.

- Suspend a solution

Any files in error will be moved to an error folder or archived. On the next run those files will have already been processed and moved to an appropriate folder.

For additional videos relating to BimlFlex and Snowflake integration, see:

For additional documentation regarding Snowflake configuration please refer to the Snowflake Configuration Overview.