Azure Data Factory Deployment Overview

BimlFlex simplifies the creation of Azure Data Factory (ADF) data logistics processes.

ADF is the cloud-native solution for processing data and orchestrating data movement from Microsoft, and allows data processing to happen in a cloud environment - minimizing any requirements for local infrastructure.

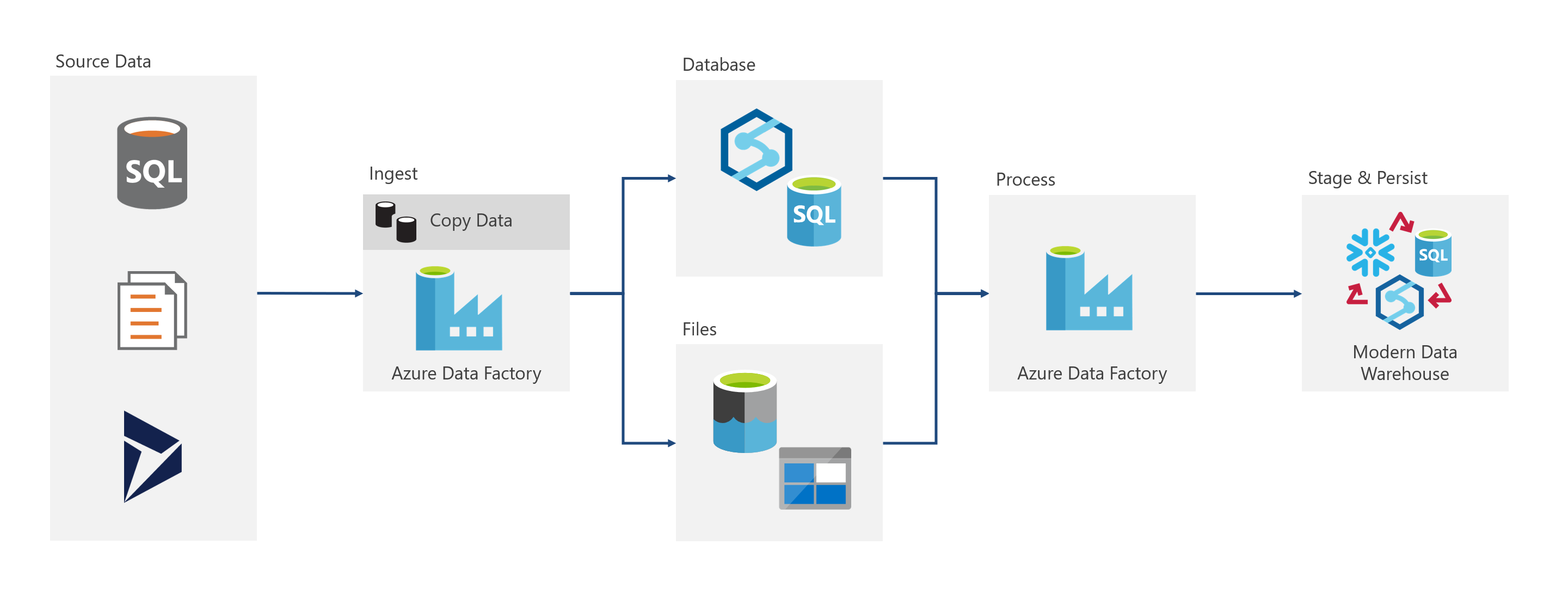

A BimlFlex solution that has been configured with the Azure Data Factory Integration Stage consists of the Data Factory, which is used to extract data from sources, and an in-database ELT processing step which loads the extracted data into target staging, persistent staging, Data Vault and Data Mart structures.

An ADF environment can be maintained using two main methods

- Azure Resource Manager (ARM) Templates

- Data Factory json files integrated into a source control repository

BimlFlex can create both of these output artifacts, allowing the designer to pick the process that works best in the current environment.

Deploying the environment through the ARM template includes everything required for the environment, including non-ADF resources when applicable, such as the BimlFlex Azure Function used to process file movements and send SQL commands to Snowflake.

Using the source control-integrated approach allows the designer to connect the Data Factory to a Git repository, where ADF resources are represented using JSON files. The designer builds these JSON files from BimlStudio, commits them to the repository and pushes them to origin, allowing ADF to show the artifacts in the Azure Data Factory design environment.

For implementations that use the Serverless Azure Synapse workspace-approach, Git integration is mandated by Microsoft, and ARM template deployments are currently not available for the integrated Data Factory. For this scenario, use the Git-integration and the individual Json files generated by BimlFlex to populate the integrated Data Factory.

Tip

The Varigence YouTube channel contains various introduction videos about using BimlFlex and BimlStudio. This video walks through the common steps and considerations for deploying an Azure Data Factory for a Staging Area. This covers metadata import as well as building and deploying the solution.

Deploying an Azure Data Factory By Example

Note

The example is intended to follow the guide for creating a Landing Area. Please refer to the initial configuration steps here.

The general architecture of the generated Azure Data Factory is below.

Building the Solution

Once the required metadata has been imported and configured, the next step is to build the solution to generate the deployable artifacts. This is done by using BimlStudio to build the solution, following the build process.

Tip

In addition to the documentation for the build process, the BimlFlex documentation also covers DDL generation and Command Line Interface (CLI) building.

Deploying the Solution

As part of the the build process, BimlFlex will output artifacts and name them using the <Output Folder> and <Data Factory Name>.

Note

Output Folder: The default output folder is <Project Working Directory>\output\.

This can be configured under the BimlStudio project properties under Build, Output Path.

Data Factory Name: Uses the AzureDataFactoryName Setting, or BimlFlex if the Setting is blank.

A PowerShell script named adf-deploy.<Data Factory Name>.ps1 will be output to the <Output Folder>\Deploy\ folder to assist with deployment. BimlFlex will automatically input the Azure Environment Settings if populated or these can be entered manually prior to script execution.

All variables and artifacts should be reviewed prior to the execution of the script as executing the script will generate billable Azure artifacts.

Important

The PowerShell script will create the Azure resources specified within the JSON files. In addition, the script will overwrite an existing object in Azure with the one currently defined JSON values if it already exists.

Even though PowerShell is used in the example, the Azure Portal can also be used.

This method uses the arm_template.json and arm_template_parameters.json files found in the <Output Folder>\DataFactories\<Data Factory Name>\ directory.

These files are aggregate of the entire Azure environment generated by BimlFlex.

Tip

For additional details on deploying your Azure Data Factory refer to the below guides:

BimlFlex will also output the JSON for each artifact individually should they be required for additional deployment methodologies.

These are found inside the <Output Folder>\DataFactories\<Data Factory Name>\<Artifact Type> directories named <Object Name>.json.

Wrapping Up

That concludes the deployment of an Azure Data Factory. If not already done, the Staging Area database would also need to be completed prior to execution of any Azure Pipelines. Additional steps for this, and the other Integration Stages can be found in the Deploying the Target Warehouse Environment section below.

If accessing an on-premise data source, an Integration Runtime will also need to be configured.

The example uses an Azure Key Vault with pre-configured Secrets. Refer to Azure Key Vault and Secrets for details on configurations.

Additional reading is provided under the Detailed Configuration to highlight initial environment requirements, alternate configurations and optional settings.

Detailed Configuration

In addition to the scenario given the example, BimlFlex support multiple Integration Stages and Target Warehouse Platforms. The following sections outline the specific considerations when Azure Data Factory across various architectures and platforms. Although features are highlight that are Azure Synapse or Snowflake specific, the following articles are only designed to highlight the Azure Data Factory implications. The referenced implementation guides should still be consulted prior to deploying an Azure Data Factory using either platform.

Tip

For additional details on Target Warehouse Platforms refer to the below guides:

Configuring a Landing Area

When using ADF to orchestrate data movement a Landing Area is required to ensure the data is in the same environment as the Target Warehouse Environment. This subject is covered in depth in the separate Configure Azure Data Factory Landing Area guide.

Configuring an ADF Project

In order to configure the Project for Azure Data Factory, simple set the Integration Template to Azure Data Factory.

BimlFlex requires that all Connections used be enabled for Cloud, ADF Linked Service configured.

If the Connections is not already, Connection Type needs to be set to OLEDB SQL Based ELT (or ODBC SQL Based ELT for Snowflake).

Note

All Projects required a Batch and at minimum a Source Connection and Target Connection.

Converting a SSIS Project

BimlFlex allows for a rapid transition from an SSIS orchestration to an Azure Data Factory orchestration by applying the above settings. Key points of consideration though are any existing SSIS Dataflow Expressions across Configurations, Settings and Data Type Mappings.

Azure Data Factory has a separate Expression Language from SSIS and as such requires separate logic and configuration.

Initial Configuration

There are some environments that will require some additional setup outside of the configuration and installation of BimlFlex. The scenarios where configuration will need to be configured outside of the BimlFlex/BimlStudio environment are listed below.

- On-premise Data Source

- Blob Storage Configured Landing Area

On-Premises Data Source

An on-premises data source will require the installation and configuration of a Self-Hosted Integration Runtime in order for the Azure Data Factory to have access to the data. The following guides are provided for additional reference material and configuration details.

Tip

For additional details on installing and configuring and Integration Runtime refer to the below guides:

- Microsoft Docs: Integration runtime in Azure Data Factory

- Microsoft Docs: Create and configure a self-hosted integration runtime Microsoft Docs: Create a shared self-hosted integration runtime in Azure Data Factory

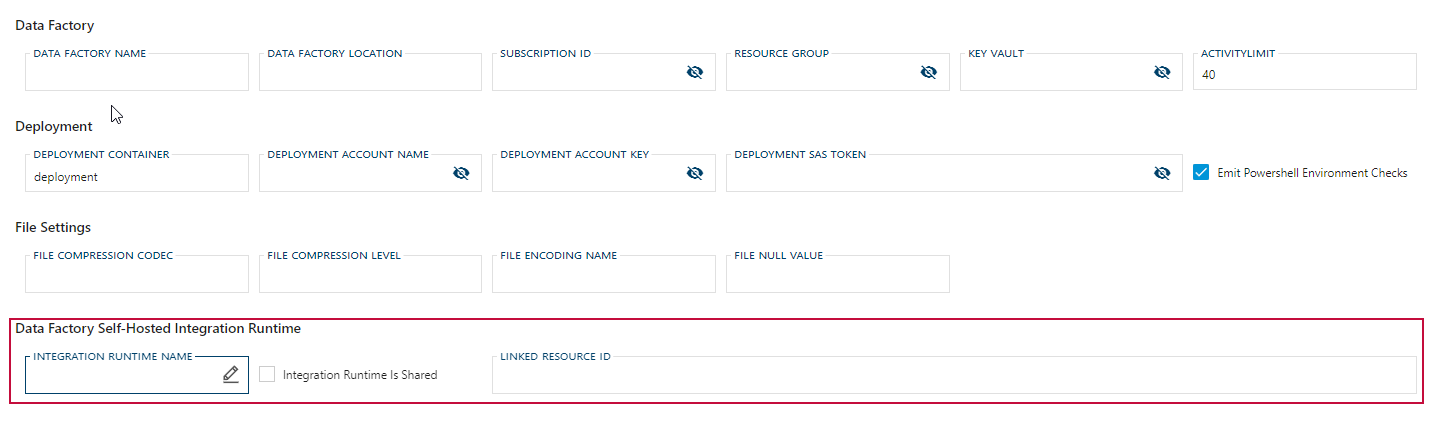

Once an Self-Hosted Integration Runtime is configured, it will need to be added to BimlFlex.

This can be done by populating the BimlFlex AzureIntegrationRuntime Setting.

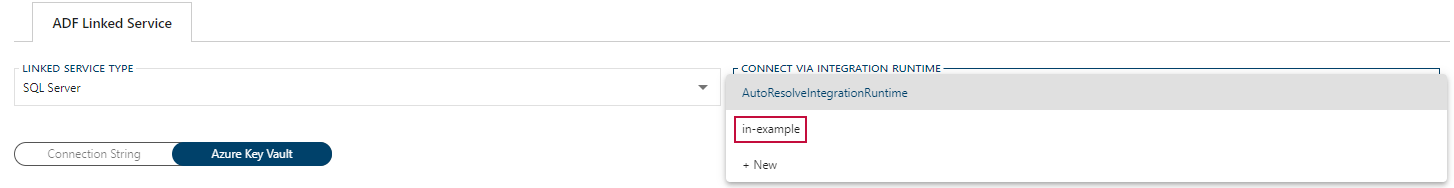

The value will then be available in the ADF Linked Service as an option under the Connect Via Integration Runtime dropdown.

If additional Integration Runtime references are needed, they can be added in the Connect Via Integration Runtime dropdown.

If a shared integration is used, add the Linked Resource Id to the metadata. This information is available from the Azure Portal from the Sharing Data Factory's Integration Runtime details. The Data Factory should also be granted access to this shared resource.

Warning

BimlFlex did not support a Shared Integration Runtime through automation prior to version 2022. If a Shared Integration Runtime is required in an earlier version it will have to be manually assigned after build.

The Microsoft Azure Data Factory Self-Hosted Integration Runtime can only connect to a single Data Factory. Most implementations will have several Data Factories that all require access to on-premises data, such as dev/test/prod environment-specific versions of the deployed BimlFlex Data Factory implementation.

It is recommended that a singular Data Factory is created to only serve the shared Integration Runtime with other Data Factories. This allows for separation of concern for the functionality the Data Factories perform. Once the sharing Data Factory has been created, share the Integration Runtime to all other Data Factories. In BimlFlex, add the information about the sharing Data Factory in Settings > Azure > Data Factory Self-Hosted Integration Runtime.

Blob Storage Configured Landing Area

Outside of configuring the appropriate settings for the Blob Containers, the appropriate Azure artifacts will first need to be created.

Creating a Storage Account

Before a Blob Container can be created, an Azure Storage Account needs to be created to host it.

The minimum requirement is one Azure Storage Account.

Depending on the needs of the environment though, as many as one Azure Storage Account per Blob Container can be created.

Tip

For additional details on creating Azure Storage Account refer to the below guide:

Microsoft Docs: Create an Azure Storage account

Creating a Blob Container

The Blob Container will be where the actual blob files are landed. A separate container will need to be created for the staging (initial landing), archive (destination after a successful stage) and error (destination after a failed stage).

Tip

For additional details on creating a Blob Container refer to the below guide:

Microsoft Docs: Quickstart: Upload, download, and list blobs with the Azure portal

Azure Key Vault and Secrets

An important part of handling sensitive information in Azure is the use and management of an Azure Key Vault.

The Azure Key Vault is a secure service for allowing the Data Factory to get connection information when needed without that sensitive information being stored in the Data Factor, or the BimlFlex metadata.

The Connections defined in BimlFlex can be configured to reference a secret stored in a Key Vault. This configuration might be the whole Connection String, or a part of the connection information. The ability to configure this depends on the connection type.

The Key Vault allows the implementation of environment specific

BimlFlex Auto-generated Key Vault

With an AKV Linked Service set to BimlFlex Autogenerated KeyVault, BimlFlex will automatically generate an Azure Key Vault with a dynamically generated name. This name will change with each build. While this approach will allow the ARM template deployment to create a placeholder Key Vault, it is recommended that a named Key Vault is created and maintained outside of BimlFlex and that the metadata uses this Key Vault name in the metadata.

Key Vault access requirements

The following is the minimum Access Policy a User Principal needs in order to update the Secrets in the Key Vault.

| Access Policy | Object | Key Permissions | Secret Permissions | Certificate Permissions |

|---|---|---|---|---|

| User | User Principal | List, Set |

Tip

For additional details on Azure Key Vaults and Sensitive Information Management refer to the below guides:

Existing Azure Key Vault

When using Azure Key Vault, all Secrets referenced in BimlFlex will have to be manually created and populated in the Key Vault, outside of BimlFlex.

The generated Azure Data Factory will required the following permissions granted in order to access the required Secrets.

A User Principal will also need the minimum Access Policy to update required Secrets.

When using Azure Synapse Analytics Workspace and its integrated pipelines, assign access rights to the Synapse Workspace Application.

| Access Policy | Object | Key Permissions | Secret Permissions | Certificate Permissions |

|---|---|---|---|---|

| Application | Data Factory / Azure Synapse Workspace |

Get, List | ||

| User | User Principal | List, Set |

Tip

For additional details on managing Azure Key Vaults refer to the below guides:

Microsoft Docs: Azure Key Vault

Microsoft Docs: About keys, secrets, and certificates

Connection String Secrets

When using an Azure Key Vault to handle a connection string, the following example can be used to assist with the creation.

Note

Provider is not supported in the ADF connection string. Ensure it is not present in the Secret.

- ADF Connection String

- SQL On-Premises Example

- SQL Azure / Synapse Example

- Snowflake Example

- Blob Container Example

# SQL Server (On-Premise)

Data Source=<server name>;Initial Catalog=<database>;User ID=<user>;Password=<password>;

# SQL Server Azure or Azure Synapse

Data Source=<server name>.database.windows.net,<port>;Initial Catalog=<database>;User ID=<user>;Password=<password>;

# Snowflake

host={Server Address};account={Account Name};user={User Name};password={Password};db={Database Name};schema={Schema Name};warehouse={Warehouse Name};

# Blob Container

DefaultEndpointsProtocol=https;AccountName={Storage Account};AccountKey={Storage Account Key};EndpointSuffix=core.windows.net

Configuring BimlFlex Settings

BimlFlex Settings are used for environment details and orchestration configurations.

ADF Settings

The following Settings are required when working with Azure Data Factory regardless of Source System or Target Warehouse Environment.

- Azure\Data Factory

- Configures the DATA FACTORY NAME, SUBSCRIPTION ID, and RESOURCE GROUP

- Optional configurations for an existing KEY VAULT or INTEGRATION RUNTIME

- Staging Naming\Naming

- Configures the naming convention for

External TablesandLanding Tables

- Configures the naming convention for

These below Settings are only required when using a Linked Template ARM Deployment in ADF.

- Azure\Deployment

- Configures the name of the Account, Container and Key/Token to be used with the Linked Template Deployment

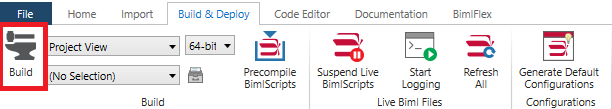

Building the Project

Once the required metadata has been imported and configured the next step is to build the solution to generate the deployable artifacts. Once a BimlStudio project is setup, this can be done simply by click the Build shortcut button.

Alternatively the Build & Deploy tab can be selected to click the Build button.

Tip

For additional details on Building a Solution refer to the below guides:

The process outlined is know as an Interactive Build, but a Command Line Build can also be configured to assist with automation and a Continuous Integration/Continuous Delivery framework.

Deploying Target Warehouse Environment

BimlFlex will generate the required SQL scripts for the deployment of all the tables, stored procedures, and the Data Vault default inserts (Ghost Keys). Once generated the scripts can be deployed to the required database.

Any one of the following methods can be used to deploy the Target Warehouse Environment.

A part of the Build process, BimlStudio will generate a SQL Server Data Tools (SSDT) project for a Microsoft SQL Target Warehouse Platform.

By default a SSDT deployment file named ssdt-deploy.<DatabaseName>.ps1 is created and placed in the ...\<Output Folder>\Deploy\ folder for each database in the target warehouse environment.

The SSDT project will have all the required tables, stored procedures and Data Vault default inserts required for the project.

Important

It is HIGHLY recommended when targeting a Azure SQL Database or Synapse Database that it is created manually in Azure prior to executing the ssdt-deploy PowerShell script.

The generated SSDT project does not contain any configuration information for the Azure settings.

This will result in the database being created with whatever defaults Microsoft is currently using, including a potentially higher than necessary Performance Level.

Alternatively the generated SSDT project can be used to create a deployment script or generate a DACPAC that can be deployed like a normal Data-tier Application package.

Tip

For additional details on PowerShell deployment refer to the below guide:

Deploying Orchestration

BimlFlex automatically generates the orchestration artifacts as part of the standard build process.

When using ADF this correlates to the generation of the required ARM Template and parameter files for the Azure Artifacts used in the orchestration process.

When requiring a Azure Function Bridge, such as with a Snowflake Deployment, these artifacts will automatically be generated and included.

Note

The files included are only generated objects. If an existing Azure Key Vault is specified, no Azure artifact will be created.

In addition, BimlFlex will also generate a JSON file for each Azure artifact should a process require the individual objects to be source controlled.

Any one of the following methods can be used to deploy the orchestration.

As part of the the build process, BimlFlex will output artifacts and name them using the <Output Folder> and <Data Factory Name>.

Note

Output Folder: The default output folder is <Project Working Directory>\output\.

This can be configured under the BimlStudio project properties under Build, Output Path.

Data Factory Name: Uses the AzureDataFactoryName Setting, or BimlFlex if the Setting is blank.

A PowerShell script named adf-deploy.<Data Factory Name>.ps1 will be output to the <Output Folder>\Deploy\ folder to assist with deployment.

BimlFlex will automatically input the Azure Environment Settings if populated or these can be entered manually prior to script execution.

All variables and artifacts should be reviewed prior to the execution of the script as executing the script will generate billable Azure artifacts.

Important

Ensure the script and JSON artifacts are reviewed prior to deployment.

The PowerShell script will create the Azure resources specified within the JSON files.

In addition, the script will overwrite an existing object in Azure with the one currently defined JSON values if it already exists.

- File Location and Structure:

{Output Folder}\DataFactories\{Data Factory Name}\- arm_template.json <== Standard ARM Template File

- arm_template_parameters.json <== Standard ARM Template Parameter File

Tip

For additional details on deploying ADF, please also refer to our guide covering deployment through PowerShell.